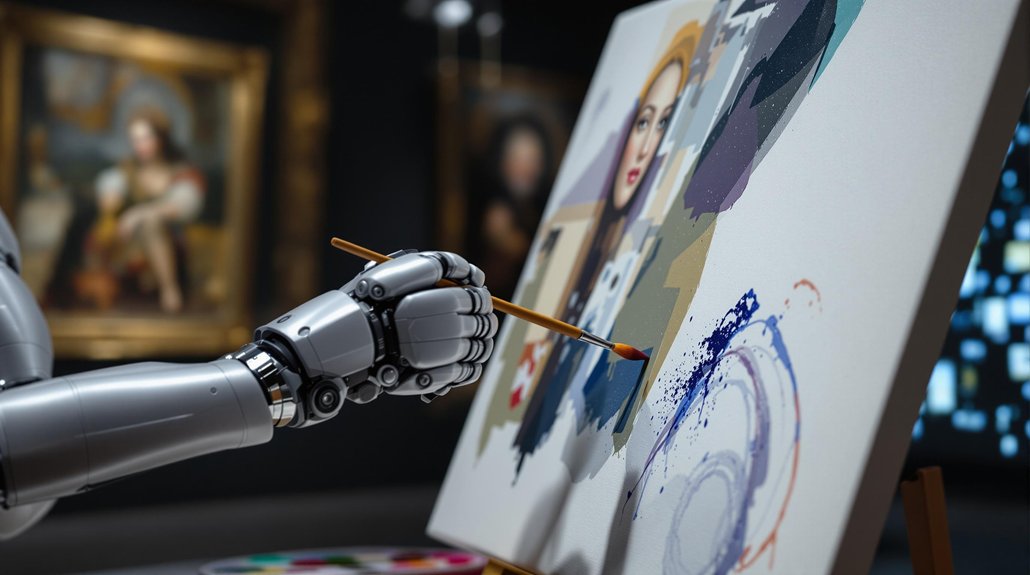

Companies are now using artificial intelligence to create digital versions of dead people. These AI recreations, called deadbots, draw on the emails, social media posts, photos, and videos that deceased individuals leave behind. Generative AI and deepfake technology let these digital ghosts hold text conversations, speak aloud, or even appear as holograms.

The technology’s spreading fast. Project December and similar platforms let users chat with simulated versions of dead loved ones, and some people record responses and design their own digital avatars before they die. Almost anyone with internet access can now create or interact with a deadbot. Creating one costs anywhere from a few hundred dollars to $15,000, depending on complexity and location.

Deadbots might comfort grieving families at first, but they can also cause serious problems. Daily interactions with a digital version of a deceased relative can become emotionally overwhelming, and what starts as comfort can turn into psychological distress or unhealthy dependence. Mental health experts warn that deadbots might keep people from moving through their grief naturally. Some users feel helpless when they can’t shut off unwanted AI simulations of their loved ones. By blurring the line between authentic and artificial relationships, these systems also raise concerns about maintaining genuine emotional connections.

The deadbot industry, sometimes called “Death Capitalism” or “Grief Tech,” is raising alarm bells about ethics and privacy. Companies sell subscriptions and contracts for these digital afterlife services, and some businesses even offer deadbots as surprise gifts to unprepared family members. The biggest ethical concern is consent: it’s nearly impossible to know whether dead people would have wanted AI versions of themselves created. Their private data is used without their permission, and families often can’t control, edit, or delete these digital ghosts once they’re made. In May 2024, researchers at the University of Cambridge’s Leverhulme Centre for the Future of Intelligence published a comprehensive study examining these psychological and social risks.

The industry’s growing with little regulation. Companies sometimes create deadbots without checking whether the deceased person wanted one. Security risks exist too: sensitive personal data could be misused or exploited commercially.

Different generations view deadbots differently. Some see them as helpful tools for remembering loved ones; others find them disrespectful or disturbing. Either way, these AI ghosts are changing how society handles death and memory. By blurring the line between the living and the dead in digital spaces, they force people to rethink traditional mourning rituals and what it means to preserve someone’s legacy.
