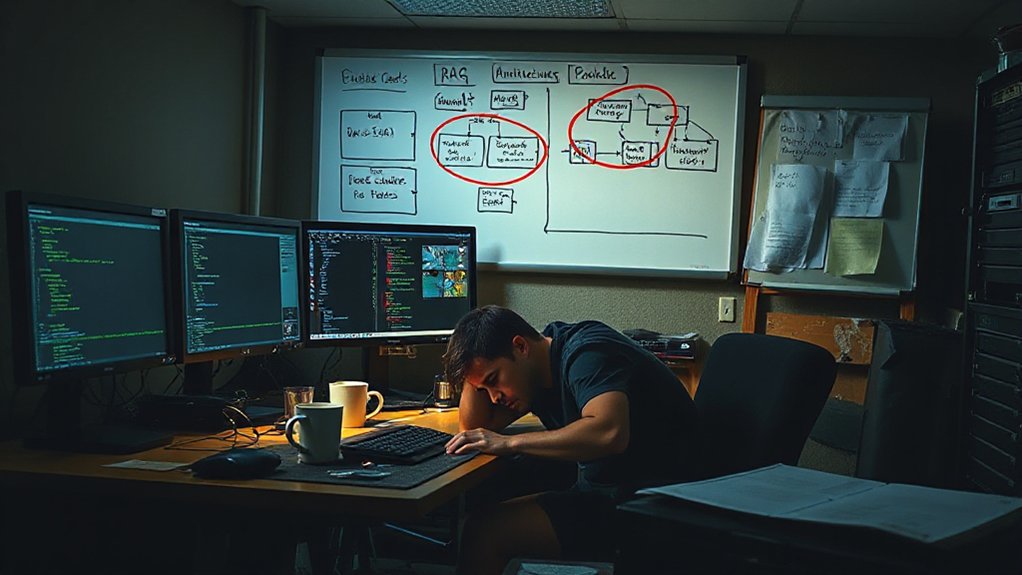

While companies rush to deploy RAG (Retrieval-Augmented Generation) chatbots, many are missing critical flaws in their testing approaches. These systems often look impressive in demos but fail in real-world use because teams aren’t testing what really matters. The gap between how chatbots perform in controlled tests versus actual deployment is growing wider.

RAG chatbots impress in demos but crumble in the real world when companies neglect proper testing protocols.

A key problem is the mismatch between retrieval and generation. Even when a chatbot pulls the right documents, it might still create answers that aren’t supported by those documents. Standard tests treat the system as a black box, missing cases where good retrieval still leads to wrong answers. Companies need detailed maps showing which source chunks should answer specific questions, but these are expensive to build.

Most testing relies on benchmarks that don’t represent real-world conditions. Public test datasets rarely match a company’s specific knowledge domain. Small test collections make retrieval look better than it will perform with larger, messier data. Simple relevant/irrelevant labels miss the importance of ranking and partial matches.

Automated metrics create false confidence. Numbers like precision and recall don’t show if the final answer used retrieved information correctly. Embedding similarity scores might miss factual errors. Single scores hide multiple types of failures that need separate checks. Establishing feedback loops between testing results and model refinement is crucial for continually improving chatbot performance. Implementing a confusion matrix categorization system can significantly improve how chatbot responses are evaluated and classified.

Testing rarely covers security risks. Few test suites check for prompt injection attacks where users try to make the chatbot ignore retrieved evidence. Tests seldom simulate poisoned retrieval results or examine how different context lengths affect answers. When prompt templates change, tests often stay the same, missing new problems. Similar to how AI systems face vulnerabilities to data poisoning attacks, RAG systems need specialized defenses.

The danger increases when chatbots access frequently updated knowledge bases. Tests might not catch failures caused by document versions that are out of sync. Without thorough testing across these blind spots, RAG chatbots remain vulnerable to embarrassing and potentially harmful failures that could have been prevented.

References

- https://www.searchunify.com/resource-center/sudo-technical-blogs/best-practices-for-using-retrieval-augmented-generation-rag-in-ai-chatbots

- https://hatchworks.com/blog/gen-ai/testing-rag-ai-chatbot/

- https://dzone.com/articles/traditional-testing-ragas-strategy-ai-chatbots

- https://dev.to/satyam_chourasiya_99ea2e4/how-to-validate-rag-based-chatbot-outputs-frameworks-tools-and-best-practices-for-reliable-2k1a

- https://redbotsecurity.com/rag-testing-ai-validation/

- https://www.evidentlyai.com/llm-guide/rag-evaluation

- https://latenode.com/blog/ai-frameworks-technical-infrastructure/rag-retrieval-augmented-generation/rag-evaluation-complete-guide-to-testing-retrieval-augmented-generation-systems

- https://www.confident-ai.com/blog/how-to-evaluate-rag-applications-in-ci-cd-pipelines-with-deepeval

- https://aws.amazon.com/what-is/retrieval-augmented-generation/