Many computer users are now setting up their own private Large Language Models (LLMs) at home. The trend is growing as people look for AI options that don’t share their data with large companies: a local LLM keeps all prompts and responses on the user’s own device rather than sending them to cloud servers. Running these systems requires specific hardware and software, but it’s becoming more accessible to average users.

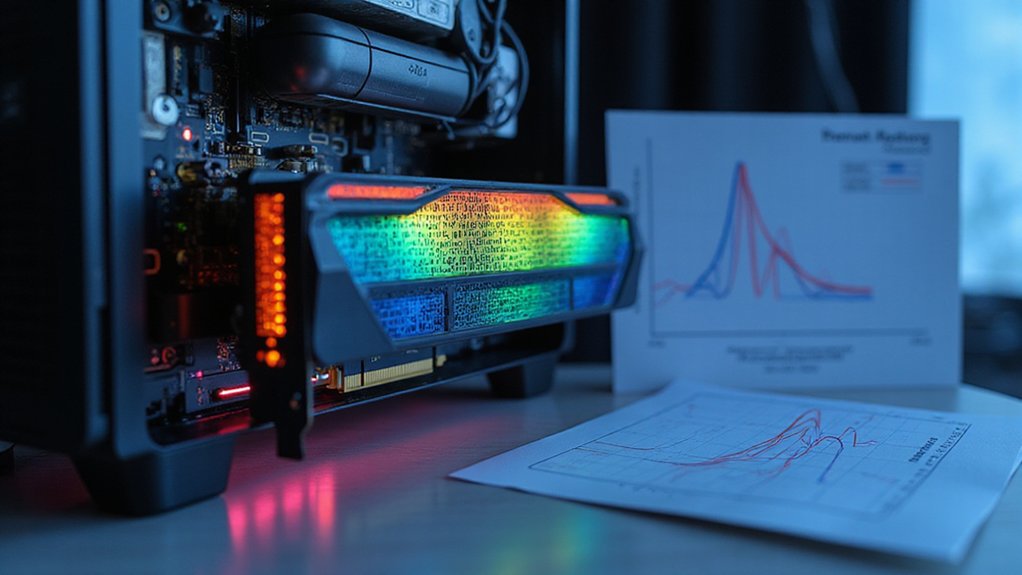

For basic LLM use, a computer needs at least 16GB of RAM, though 32GB works better for larger models. A dedicated graphics card with 8GB of video memory (VRAM) helps the AI run smoothly, and a fast SSD of 500GB or more holds the AI models and operating system. Users with heavier workloads might build systems with multiple high-end graphics cards and 128GB of RAM. Quantum computing is sometimes mentioned as a technology that could eventually change how these models process information, but that remains speculative and does not affect hardware choices today.
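The guideline figures above can be turned into a quick checklist. The following is a minimal sketch, not a definitive sizing tool: the `Machine` class and `check_machine` function are hypothetical names introduced here for illustration, and the thresholds are simply the numbers quoted in the paragraph above.

```python
from dataclasses import dataclass

# Guideline thresholds from the text, all in GB.
MIN_RAM_GB = 16
RECOMMENDED_RAM_GB = 32
MIN_VRAM_GB = 8
MIN_SSD_GB = 500


@dataclass
class Machine:
    """Hypothetical description of a computer's key specs."""
    ram_gb: float
    vram_gb: float  # use 0 if there is no dedicated graphics card
    ssd_gb: float


def check_machine(m: Machine) -> list[str]:
    """Return one note per guideline that is missed or only barely met.

    An empty list means the machine meets every guideline, including
    the recommended 32GB of RAM.
    """
    notes = []
    if m.ram_gb < MIN_RAM_GB:
        notes.append(f"RAM: {m.ram_gb}GB is below the {MIN_RAM_GB}GB minimum")
    elif m.ram_gb < RECOMMENDED_RAM_GB:
        notes.append(f"RAM: {m.ram_gb}GB works, but {RECOMMENDED_RAM_GB}GB suits larger models")
    if m.vram_gb < MIN_VRAM_GB:
        notes.append(f"VRAM: {m.vram_gb}GB is below the suggested {MIN_VRAM_GB}GB")
    if m.ssd_gb < MIN_SSD_GB:
        notes.append(f"SSD: {m.ssd_gb}GB is below the suggested {MIN_SSD_GB}GB")
    return notes


if __name__ == "__main__":
    for note in check_machine(Machine(ram_gb=16, vram_gb=6, ssd_gb=1000)):
        print(note)
```

Treat the result as a rough first pass; a specific model may need more or less than these general figures.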

Several software options make running private AI easier. Ollama offers a simple command-line tool for Mac, Linux, and Windows. LM Studio provides a user-friendly interface for managing AI models. Other options include text-generation-webui for advanced users and GPT4All for computers with less powerful hardware.
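Besides its command-line tool, Ollama runs a local HTTP server that other programs can call. The sketch below shows one way to send a prompt to it from Python using only the standard library. It assumes Ollama is running on its default port (11434) and that the named model has already been downloaded (for example with `ollama pull mistral`); the helper names `build_request` and `ask` are made up for this example.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumed unchanged).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for the local server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text.

    Raises urllib.error.URLError if the server is not reachable.
    """
    request = build_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

With the server running, `print(ask("mistral", "Explain quantization in one sentence."))` would print the model's reply. The request never leaves the machine, which is the point of a private setup.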

Linux distributions, especially Ubuntu LTS releases, tend to be the best-supported platform for AI tools. Windows and macOS also work with most LLM platforms, but Linux generally offers better compatibility with AI libraries. NVIDIA graphics cards have the widest software support for AI tasks, largely because most AI libraries target NVIDIA’s CUDA platform first.

The size of an AI model determines how much computing power it needs. Larger models generally perform better but require more memory and processing power. Many users choose “quantized” models, which store their weights at lower numeric precision so they use less memory, at the cost of being slightly less accurate. Popular model choices include Llama 2, Mistral, and Dolphin (a family of community fine-tunes). Serge, which is often listed alongside them, is a self-hosted chat interface rather than a model. These models use transformer architectures to understand and generate human-like text.
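The link between model size, quantization, and memory can be estimated with simple arithmetic: the weights take roughly the number of parameters times the bits stored per weight. The sketch below works that out. The function names are hypothetical, and the 1.2 overhead factor (to leave room for context and runtime buffers) is an assumption for illustration, not a measured value.

```python
def weights_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def estimated_memory_gb(n_params: float, bits_per_weight: float,
                        overhead: float = 1.2) -> float:
    """Weights plus an assumed allowance for context and runtime buffers."""
    return weights_size_gb(n_params, bits_per_weight) * overhead


if __name__ == "__main__":
    n_params = 7e9  # a 7-billion-parameter model
    for bits in (16, 8, 4):
        weights = weights_size_gb(n_params, bits)
        total = estimated_memory_gb(n_params, bits)
        print(f"{bits:>2}-bit: ~{weights:.1f} GB weights, ~{total:.1f} GB with overhead")
```

By this estimate, a 7-billion-parameter model needs about 14 GB for its weights at 16 bits but about 3.5 GB at 4 bits, which is why quantized models fit on a graphics card with 8GB of video memory.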

Graphics cards process AI tasks much faster than CPUs alone. While it’s possible to run some models on just a CPU, the responses will be noticeably slower. Some smaller models can even run on newer laptop chips like Apple’s M1/M2/M3 series, whose built-in GPU shares memory with the CPU.

Users can optimize performance by using smaller models, ensuring good cooling for their computer, and monitoring memory usage during AI conversations.
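On systems with an NVIDIA card, memory monitoring can be scripted around the `nvidia-smi` tool that ships with the driver. The following is a minimal sketch under that assumption. The helper names and the 90% warning threshold are illustrative choices, not recommendations from any vendor.

```python
import subprocess

# Ask nvidia-smi for used and total memory per GPU, in MiB, as bare CSV.
QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(output: str) -> list[tuple[int, int]]:
    """Parse lines like '4096, 8192' into (used_mib, total_mib) pairs."""
    readings = []
    for line in output.strip().splitlines():
        used, total = (int(field.strip()) for field in line.split(","))
        readings.append((used, total))
    return readings


def read_gpu_memory() -> list[tuple[int, int]]:
    """Run nvidia-smi once; raises an error if the tool is missing or fails."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_memory(result.stdout)


def usage_warnings(readings: list[tuple[int, int]],
                   threshold: float = 0.9) -> list[str]:
    """Return a warning for each GPU whose memory use exceeds the threshold."""
    warnings = []
    for index, (used, total) in enumerate(readings):
        if total > 0 and used / total > threshold:
            warnings.append(f"GPU {index}: {used} of {total} MiB in use")
    return warnings
```

Calling `usage_warnings(read_gpu_memory())` during a long conversation gives an early sign that a smaller or more heavily quantized model may be needed.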
