Sydney radio station CADA deceived listeners by presenting “Thy,” an AI host, as a real person for nearly six months. The station used AI-generated images and borrowed employee likenesses without disclosure. When media reports exposed the truth, listeners expressed anger and betrayal across social media. The incident sparked urgent discussions about AI ethics in broadcasting and prompted a response from the regulator, ACMA. The controversy highlights growing concerns about media authenticity in the AI era.

Sydney’s CADA radio station presented an AI host named “Thy” as a human radio personality for nearly six months, and the revelation has ignited widespread anger among listeners. The station promoted Thy as a real person, using AI-generated images and borrowing the likeness of actual employees without disclosing the truth to its audience.

CADA created Thy using ElevenLabs technology, which produces realistic speech that mimics human radio hosting styles. Marketing materials promised listeners companionship and engagement from what they believed was a human host. This deception was maintained until media reports exposed the truth.

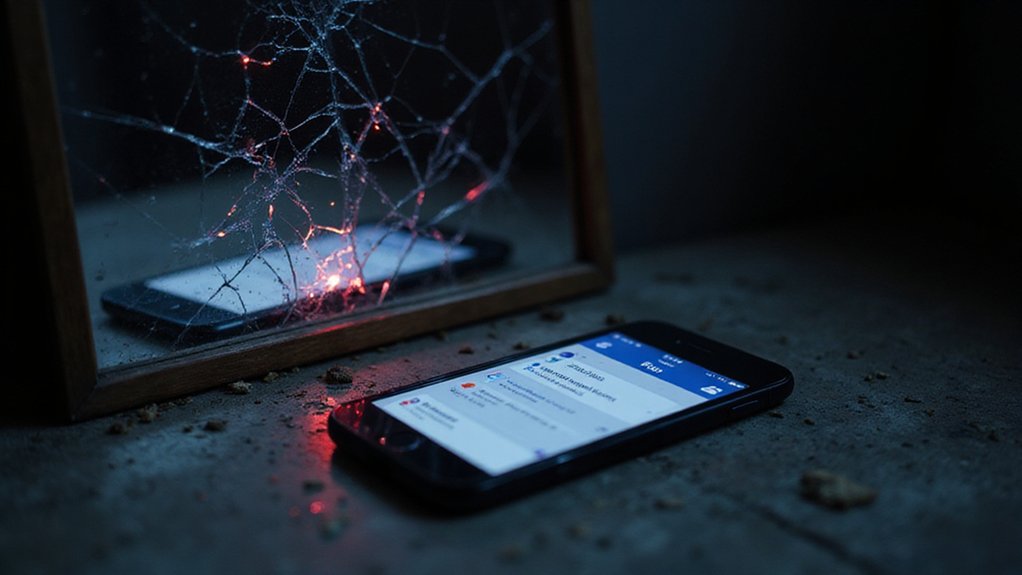

Listeners have expressed deep feelings of betrayal across social media platforms. Many have questioned whether they’ll continue supporting the station. Comments emphasize the importance of honesty in broadcasting, with fans feeling misled by a voice they had grown to trust.

The incident has prompted urgent discussions about AI ethics in media. Industry professionals and voice actor associations have condemned the practice, warning that normalizing such deception could seriously damage public trust in broadcasting. Teresa Lim, a voice actor, expressed particular offense at what she called an industry-first move. The case illustrates why major AI ethics frameworks emphasize shared values such as transparency and fairness. Experts have drawn parallels to AI-powered disinformation campaigns and their harmful effects on society.

The Australian Communications and Media Authority (ACMA) has responded by highlighting the urgent need for AI “guardrails” in broadcasting. Political leaders are now reviewing laws on AI transparency, while advocacy groups demand mandatory disclosures for all AI-generated content.

Some organizations are calling for financial penalties for media outlets that use AI without proper notification to audiences. The controversy has exposed vulnerabilities in the relationship between broadcasters and listeners, raising concerns about growing skepticism toward media authenticity. The absence of proper human oversight in this AI application represents a critical failure in responsible media management.

The backlash intensified when it became clear the station had no intention of revealing Thy’s true nature until forced to do so. Critics describe this as an erosion of trust in traditional broadcasting at a time when distinguishing between real and artificial content is increasingly difficult.
