As artificial intelligence continues to evolve, chatbots are raising important questions about free speech in the digital age. Recently, an AI company claimed constitutional protection for its chatbot outputs, sparking debate about whether machine-generated content deserves First Amendment rights.

The legal environment remains complex. The First Amendment protects the creation and sharing of information, and some courts have held that computer code can qualify as protected speech, which offers precedent for AI-related cases. Chatbots, however, lack legal personhood. That raises a fundamental question: can something that isn’t a person have free speech rights?


When chatbots generate content that could be harmful, like defamation or threats, responsibility typically falls on the humans involved – either developers or users. Courts must determine who’s accountable when AI systems produce problematic speech.

Major AI companies often impose stricter content restrictions than international free speech standards recommend. These policies limit what chatbots can discuss and sometimes block content on controversial topics. Critics argue this restricts users’ access to information.

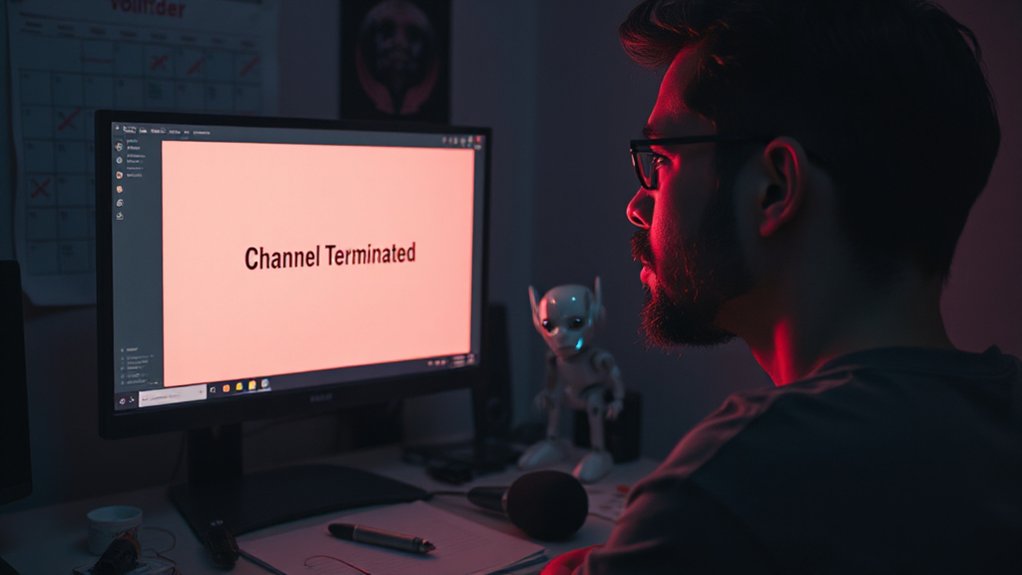

The debate intensifies when chatbots engage in role-playing that might enable harmful conduct. Recent lawsuits raise the question of whether limiting chatbot outputs sacrifices the collective rights of users in order to prevent isolated harms. Character.AI, with over 20 million monthly users, faces litigation that could take the platform offline entirely. Courts must balance free expression against potential damage.

Political speech presents another challenge. There’s growing concern about AI-generated deepfakes and false information affecting elections. Lawmakers are trying to address these risks without overreaching and stifling legitimate expression.

Like human speech, AI-generated content isn’t protected when it falls into established exceptions such as incitement to violence, true threats, or defamation. The same legal standards apply, though enforcement becomes more complicated.

The growing use of AI in workplace monitoring raises additional concerns about how speech and expression are controlled in professional environments. The central question isn’t whether chatbots themselves deserve rights, but whether humans using AI tools should be protected when expressing themselves through these systems.

As AI technology becomes more integrated into daily communication, courts and legislators will need to develop clearer frameworks that protect free expression while preventing genuine harms from AI-generated content.
