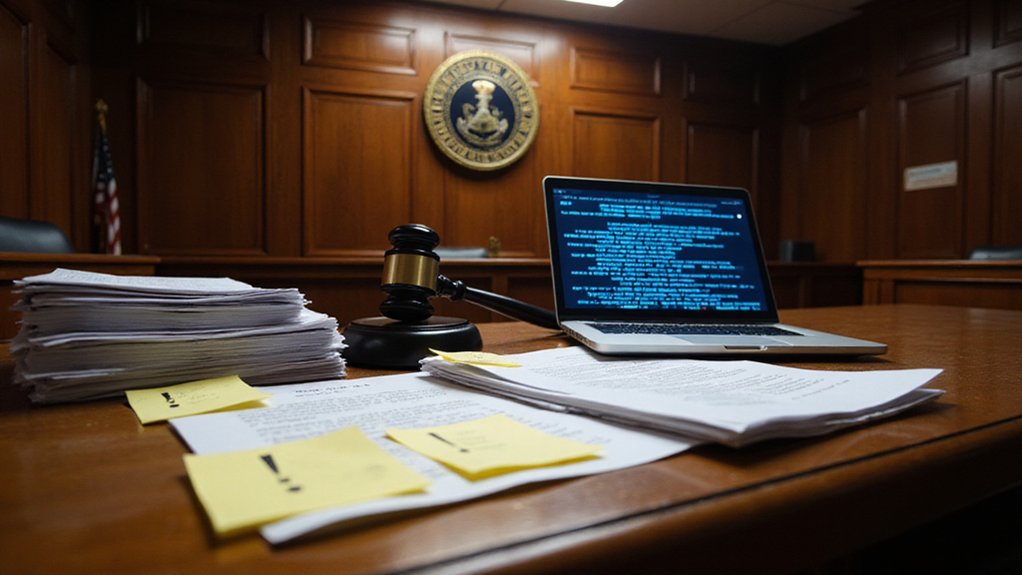

As the legal industry rapidly embraces artificial intelligence, AI-generated legal briefs are becoming increasingly common in law firms across the country. Recent data shows that the legal profession has the highest GenAI adoption rate among all professionals, with 28% of law firms using these technologies as of 2025. Yet these AI systems sometimes produce convincing but false narratives that can undermine legal arguments when not properly verified.

Federal judges are now sounding alarms about the risks of unchecked AI use in court filings. In a strongly worded opinion, one judge warned attorneys of serious sanctions for submitting AI-generated documents without proper verification. These sanctions could include monetary penalties, case dismissals, or professional disciplinary actions.

The concern centers on AI hallucinations: fabricated information that appears factual but isn’t. Benchmark testing reveals that legal-specific AI models hallucinate in at least 1 out of 6 queries (roughly 17%), while general-purpose chatbots fare even worse, with hallucination rates between 58% and 82% on legal questions. These errors can lead to false citations, misrepresentation of legal precedents, and faulty legal reasoning.

“The duty of competence requires attorneys to understand the tools they’re using,” explained a court spokesperson. “Responsibility for AI-generated content ultimately falls on the attorney submitting the work.”

Courts are updating their rules to address this growing issue. Many jurisdictions now require lawyers to disclose when AI generates substantial portions of legal briefs. Judges expect attorneys to verify all citations and legal arguments before submission.

Despite these challenges, 72% of legal professionals view AI as a positive force in their profession. Law firms continue to adopt these technologies for contract drafting, legal research, and document review to enhance productivity. Yet 52% of legal organizations currently lack GenAI usage policies, which leaves significant room for unchecked AI use in court filings.

Client relationships are also changing. Clients increasingly expect transparency about AI use in their legal matters, and law firms must balance efficiency gains against maintaining personalized service. Meanwhile, the legal community is advocating for industry-wide codes of ethics to govern AI use in legal practice.

As one legal technology expert noted, “AI can pass the bar exam with flying colors, but knowing when and how to use it properly in court remains uniquely human.”

References

- https://legal.thomsonreuters.com/blog/genai-report-executive-summary-for-legal-professionals/

- https://legal.thomsonreuters.com/blog/how-ai-is-transforming-the-legal-profession/

- https://hai.stanford.edu/news/ai-trial-legal-models-hallucinate-1-out-6-or-more-benchmarking-queries

- https://getciville.com/how-will-law-firms-use-ai-in-2025/

- https://research.aimultiple.com/generative-ai-legal/