When did creating a perfect digital clone of someone become easier than making a decent cup of coffee? Welcome to 2025, where deepfake videos are multiplying at 900% annually and nobody can tell what’s real anymore. Not the experts. Not the fancy detection software. Definitely not your average person scrolling through social media.

The numbers are frankly terrifying. Deepfake fraud cases shot up 1,740% in North America between 2022 and 2023. Financial losses? Over $200 million just in the first quarter of 2025. Remember Arup, the engineering firm? Criminals used a deepfake to impersonate its CFO on a live video call and walked away with $25 million. A video call. With multiple people watching. The scammers even populated the call with fake employees who looked and sounded legitimate enough to dismiss any initial doubts.

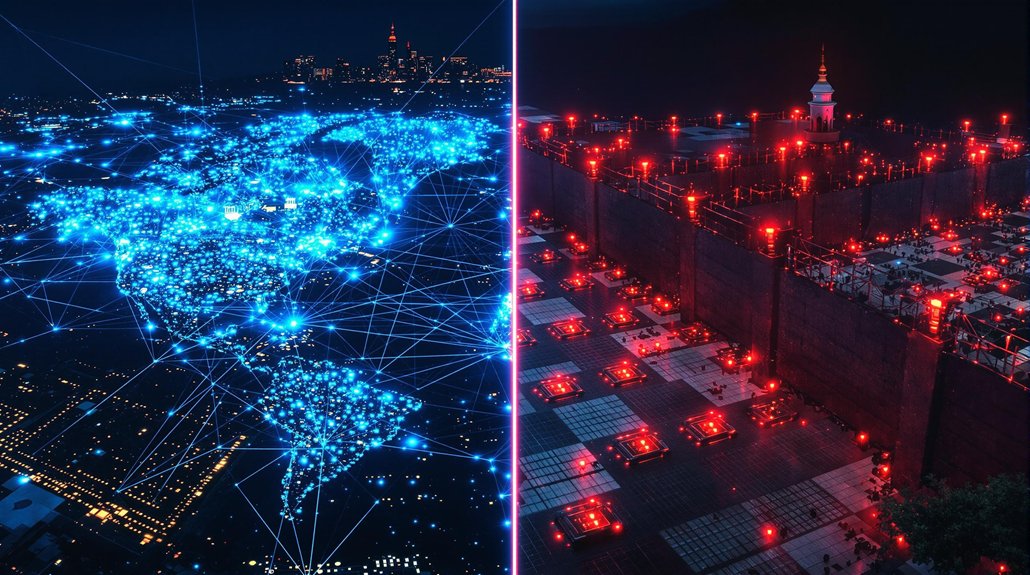

State actors and cybercriminals are pouring money into this tech faster than defenses can keep up. It’s an arms race where the bad guys have rocket ships and the good guys are still building bicycles. Those state-of-the-art deepfake detectors everyone’s banking on? They lose nearly half their accuracy the moment they leave the lab. Real world: 1, Detection tech: 0. Deloitte predicts this nightmare will balloon into $40 billion in AI-enabled fraud losses in the U.S. by 2027.

Humans aren’t doing much better. People can spot deepfakes with about 55-60% accuracy. That’s barely better than flipping a coin. Meanwhile, the technology behind these digital doppelgangers keeps getting scarier. Generative Adversarial Networks form the backbone of most deepfake operations: basically two AI systems locked in a contest, one forging fakes and the other trying to catch them, until the forger learns to lie well enough to win. Voice cloning needs just a few seconds of audio now. Video synthesis syncs lip movements almost seamlessly to generated speech. Facial recognition’s uneven accuracy across demographic groups has become a vulnerability too, one that deepfake creators exploit to target specific demographics.

The truly messed-up part? Jailbroken AI models are floating around the dark web, helping criminals craft attacks that sidestep detection entirely. These tools scrape personal data, analyze speech patterns, and study mannerisms. They’re building digital puppets so convincing that traditional security protocols might as well be tissue paper.

Business executives are sweating bullets. Twenty-eight percent call cyber threats their biggest nightmare, yet 15% admit they’re basically defenseless against deepfakes. Political figures? Prime targets. Governments are scrambling as synthetic imposters of officials pop up everywhere, requesting sensitive information, spreading chaos.

The creation tools will always outrun the detection tools. That’s the ugly truth nobody wants to admit.

References

- https://www.beazley.com/en/news-and-events/deepfakes-the-latest-weapon-in-the-cyber-security-arms-race/
- https://www.federalreserve.gov/newsevents/speech/barr20250417a.htm
- https://www.weforum.org/stories/2025/07/why-detecting-dangerous-ai-is-key-to-keeping-trust-alive/
- https://www.lowyinstitute.org/the-interpreter/deepfakes-nuclear-weapons-why-ai-regulation-can-t-wait
- https://www.securityweek.com/deepfakes-and-the-ai-battle-between-generation-and-detection/