Prosecutors kept using facial recognition software to build cases against suspects. They just forgot to mention it to judges. Or defense attorneys. Or anyone, really.

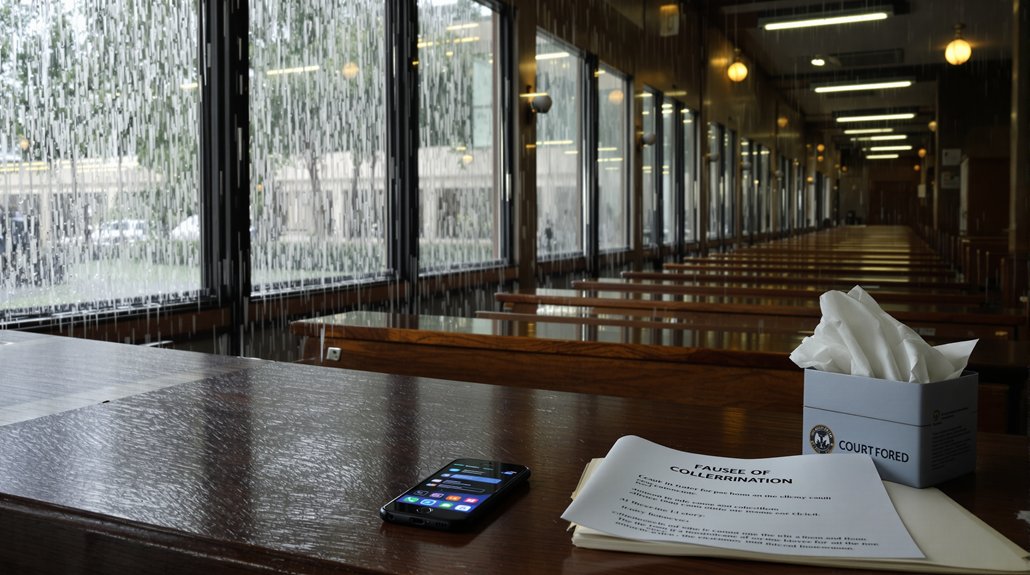

A Cleveland homicide investigation shows exactly how this game works. Police ran Clearview AI facial recognition, which spit out multiple photos of multiple people, including their suspect. When prosecutors went for a search warrant, they somehow left out that tiny detail about using AI. The trial court in Cuyahoga County wasn’t amused. It suppressed all the evidence obtained under that warrant, calling the warrant invalid because nobody mentioned the facial recognition part.

Now prosecutors are scrambling, appealing the suppression to Ohio’s 8th District Court of Appeals in State v. Tolbert. An amicus brief from the ACLU of Ohio basically spelled it out: hiding AI facial recognition means judges can’t properly assess whether there’s reliable probable cause. The pathway is painfully obvious. AI generates a candidate list, cops focus on someone, they get a warrant while conveniently forgetting to mention the AI part, then everything blows up when the defense finds out.

Other states are catching on to this nonsense. In New Jersey, State v. Arteaga now requires that defendants be told when facial recognition was used. They get details about the algorithm, system settings, image quality, and any alterations that might jack up error rates. That transparency requirement speaks to the ethical concerns about automated systems driving crucial decisions with minimal human oversight. Colorado and Virginia have gone further, establishing testing standards for facial recognition accuracy to keep unreliable systems out of criminal cases.

Detroit had to settle a case and now mandates informing defendants about facial recognition use.

The technology itself is sketchy enough. These systems pump out candidate lists, not definitive matches. At least six people, all Black, have been falsely accused after facial recognition matches. Low-quality surveillance images make things worse. Cops sometimes alter probe images, which one report called a “frequent problem.” Clearview AI’s own documentation includes disclaimers about the reliability of its results, yet law enforcement conveniently omits this when seeking warrants.

By the end of 2024, fifteen states had enacted laws limiting police facial recognition use. Detroit now requires officer training on the risks and prohibits arrests based solely on facial recognition hits. Documentation requirements for system parameters and candidate lists are becoming standard.

The message is getting clearer. Hide the AI, lose your evidence. Prosecutors who think they can sneak facial recognition past everyone are learning the hard way that courts aren’t playing along anymore.

References

- https://www.acluohio.org/press-releases/aclu-ohio-files-amicus-brief-raising-concerns-about-use-facial-recognition-technology/

- https://techpolicy.press/status-of-state-laws-on-facial-recognition-surveillance-continued-progress-and-smart-innovations

- https://innocenceproject.org/news/when-artificial-intelligence-gets-it-wrong/

- https://clarkstonlegal.com/law-blog/flawed-facial-recognition-leads-to-felony-charge/

- https://www.everlaw.com/blog/ai-and-law/unlocking-justice-ai-evidence-analysis-forensics/