Tech giants increasingly harvest creative content under the guise of “AI training.” Companies like OpenAI and Google scrape millions of works without proper authorization, claiming “fair use” despite potential violations of terms of service. Breaches and large-scale scrapes at LinkedIn, Yahoo, and other platforms have exposed billions of user records, highlighting widespread security vulnerabilities. Content creators face unauthorized use of their work while legal frameworks struggle to address these practices. The tension between innovation and data theft remains unresolved.

How safe is your personal information online? Recent evidence suggests not very. Tech giants have repeatedly exploited user data under the guise of “AI training,” raising serious concerns about privacy and copyright.

In 2018, reporting revealed that Cambridge Analytica had obtained data from up to 87 million Facebook users without their consent, harvested through a third-party app built on Facebook’s API. The scandal showed how personal information can be weaponized for political purposes. More recently, OpenAI reportedly used its Whisper speech-recognition model in 2021 to transcribe over one million hours of YouTube videos for training GPT-4, likely violating YouTube’s terms of service.

Data breaches have reached alarming levels. According to the Identity Theft Resource Center, the U.S. recorded 1,862 data compromises in 2021, a 68% increase over 2020 and 23% above the previous record set in 2017. In 2021, scrapers harvested data from about 700 million LinkedIn users, roughly 93% of its accounts, exposing email addresses, phone numbers, and location data. Even Yahoo wasn’t immune: breaches in 2013 and 2014 ultimately affected all 3 billion of its user accounts. Marriott International’s breach, disclosed in 2018, compromised the personal information of up to 500 million guests, including passport numbers and, for some guests, payment card details.

The rising tide of data breaches threatens everyone: no company is too large to fall, and no user is too insignificant to target.

Companies often justify unauthorized data scraping as “fair use” for AI development. OpenAI’s mass transcription of YouTube videos with Whisper and Google’s AI training methods have both raised significant copyright and ethical concerns. These companies prioritize building vast training datasets over respecting individual privacy rights. The proliferation of AI-generated content also raises questions about originality and intellectual property, and current U.S. copyright law offers few answers: it protects only works of human authorship, so purely AI-generated output is generally not eligible for copyright protection.

Poorly secured APIs represent a major vulnerability. LinkedIn’s 2021 scraping incident and the scraping of Alibaba’s Taobao platform, where a developer harvested 1.1 billion records over eight months beginning in late 2019, show how easily these systems can be exploited.

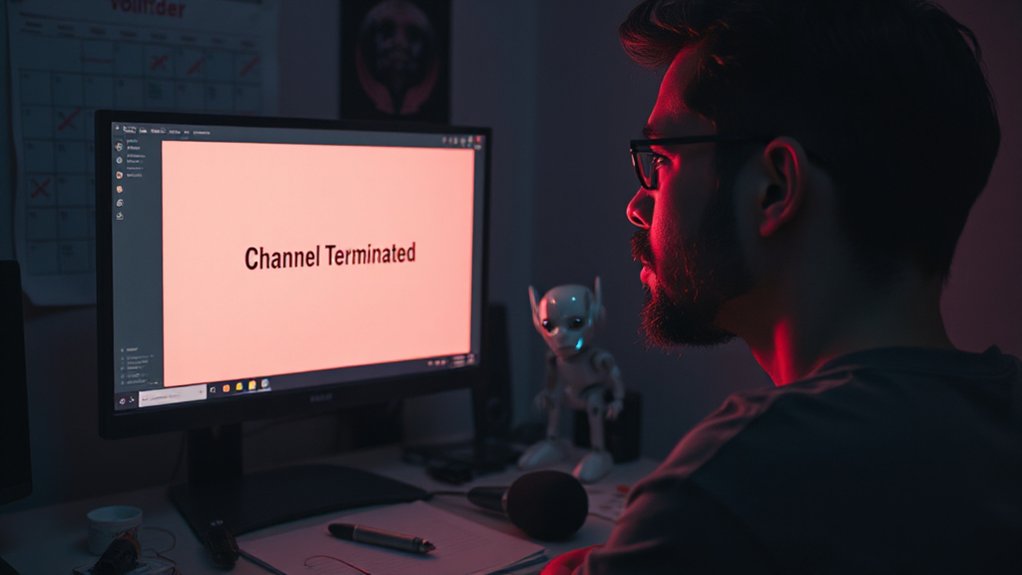

Platforms frequently fail to enforce their own terms of service, enabling widespread data exploitation.

The consequences are serious for both consumers and content creators. Trust in tech platforms erodes when personal data is mishandled. Content creators see their work used without permission or compensation to train AI systems that may ultimately replace them.

As generative AI technology advances, the line between innovation and theft grows increasingly blurred. Tech companies continue bending rules and altering policies to justify data harvesting practices, while legal frameworks struggle to keep pace with rapid technological developments.