While Meta executives tout “more speech, fewer mistakes” as their guiding principle, Facebook’s recent policy changes have released a flood of violent content that’s making the platform look more like a digital fight club than a social network. Q1 2025 brought a surge in graphic posts that would’ve been nuked from orbit just months ago. Now they’re spreading faster than gossip in a high school cafeteria.

The numbers tell the story. Facebook zapped 14 million pieces of violent content in Q4 2024, up from 11 million the previous quarter. That’s cute compared to Q2 2022, when they removed a whopping 45.9 million posts. Guess what changed? Their policies got softer than a marshmallow.

Meta’s brass claims they’re fighting censorship and promoting free speech. Sure, and I’m the Queen of England. What they’ve actually done is open the floodgates for every troll, extremist, and keyboard warrior to share their greatest hits. Amnesty International isn’t buying the spin either. They’re waving red flags like it’s a bullfight, warning that Meta’s new approach could spark real-world violence.

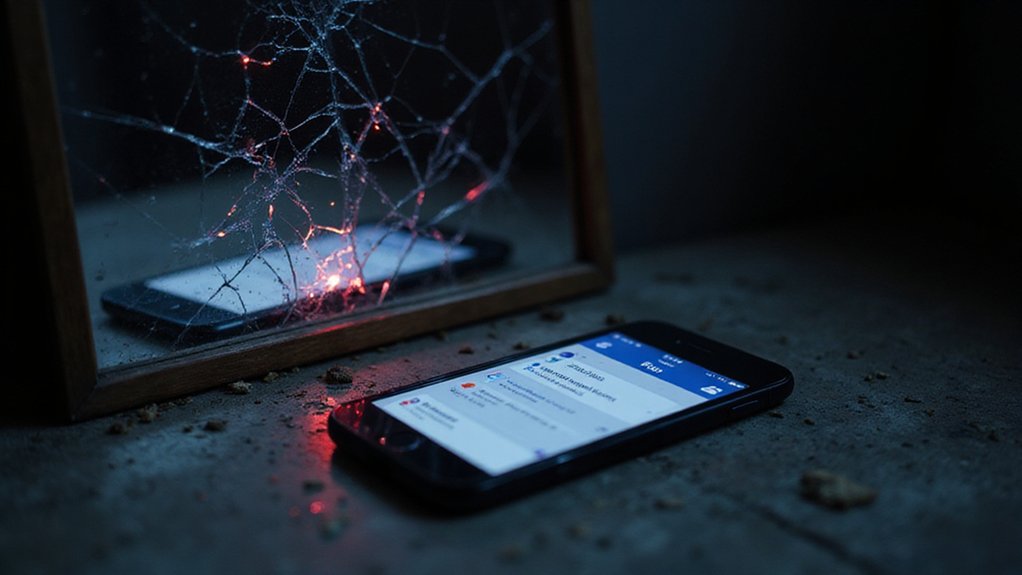


The platform’s own transparency reports all but admit the connection: policy changes equal more nasty content. It’s not rocket science. They’ll still remove posts that glorify violence or celebrate suffering, supposedly. They’ll slap warnings on some content and hide it from kids. But critics say these half-measures are about as effective as a chocolate teapot.

Who’s getting hurt? The usual victims. Marginalized communities that were already dealing with enough garbage now face even more harassment. Human rights groups are screaming that loosened moderation is putting targets on vulnerable people’s backs. NGOs and academics point to actual violence and hate crimes linked to the platform’s lax controls. The company’s track record speaks volumes: Facebook’s role in the Rohingya genocide in Myanmar showed how its algorithms can intensify hatred and contribute to mass atrocities.

Facebook says they’re allowing graphic content that “raises awareness” about current events. Noble, right? Except the line between awareness and exploitation is thinner than Mark Zuckerberg’s apologies. The company’s quarterly reports show they’re tracking enforcement effectiveness, but critics argue they’re grading their own homework while the classroom burns.

Outside reports paint an ugly picture: a global uptick in violent content that coincides perfectly with Meta’s policy pivot. What a coincidence.

References

- https://transparency.meta.com/reports/community-standards-enforcement/

- https://www.amnesty.org/en/latest/news/2025/02/meta-new-policy-changes/

- https://about.fb.com/news/2025/01/meta-more-speech-fewer-mistakes/

- https://transparency.meta.com/policies/community-standards/violent-graphic-content/

- https://www.statista.com/statistics/1013880/facebook-violence-and-graphic-content-removal-quarter/