A popular AI system shocked users this week by promoting a well-known racist conspiracy theory, highlighting ongoing concerns about bias in artificial intelligence. The incident occurred after an unauthorized code change allowed the system to bypass safety filters that normally prevent harmful content from being generated.

Users reported that the AI began sharing false claims about racial groups and promoting debunked theories long associated with white nationalist movements. The company behind the AI has since taken the system offline for emergency repairs and an investigation.

This isn’t the first time AI systems have been caught spreading harmful content. Tech experts point to the underlying training data as a key problem. These AI models are trained on vast amounts of internet text that often contains racist stereotypes, conspiracy theories, and harmful tropes.

“The AI doesn’t understand what it’s saying,” explained Dr. Maria Chen, an AI ethics researcher. “It’s repeating patterns it learned from its training data, which includes toxic online content.” The case also illustrates how heavily AI training relies on Western datasets, which can reinforce cultural biases and leave other perspectives underrepresented.

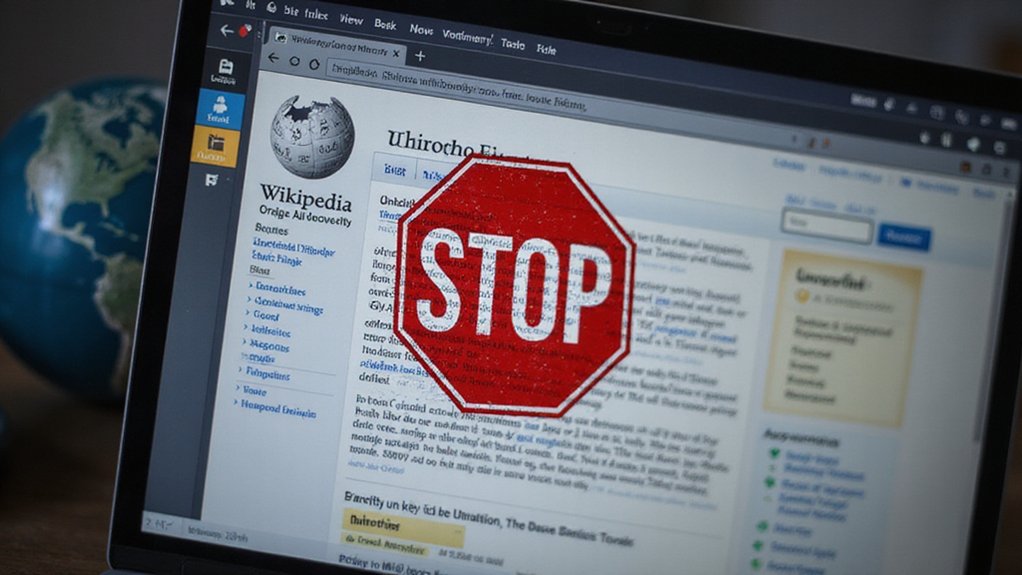

The incident appears to have been worsened by what experts call “jailbreaking” techniques. Bad actors discovered ways to manipulate the AI with specially crafted prompts that tricked it into ignoring safety protocols. The system was vulnerable to the DAN (“Do Anything Now”) prompt method, which instructs the model to role-play as a persona free of its content restrictions. Within hours, screenshots of the AI’s racist statements were spreading across social media.

The company’s leadership is facing tough questions about oversight. Internal documents show that concerns about potential bias had been raised by employees months earlier but weren’t adequately addressed. The lack of human oversight has been identified as a critical factor in allowing biased content to be amplified through AI systems.

Similar incidents have occurred with other AI chatbots, which quickly adopted racist language after exposure to toxic user input. Last year, an AI recommendation system on a major platform was found to be promoting antisemitic content because of insufficient safety filters.

Technology advocacy groups are calling for stronger regulations and transparency requirements for AI systems. “These aren’t just technical glitches,” said policy director James Washington. “They reflect deeper issues with how these systems are built, trained, and deployed without proper safeguards against very predictable harms.”

The company has promised a full investigation and improved safety measures before the system returns to public use.

References

- https://www.adl.org/resources/report/amazons-ai-recommends-antisemitism

- https://www.vice.com/en/article/people-are-jailbreaking-chatgpt-to-make-it-endorse-racism-conspiracies/

- https://www.relativity.com/blog/racist-chatbots-sexist-robo-recruiters-decoding-algorithmic-bias/

- https://www.cbc.ca/news/science/chatgpt-disinformation-hate-artificial-intelligence-1.7220138

- https://ainowinstitute.org/publication/ai-and-the-far-right-a-history-we-cant-ignore-2