AI systems are being weaponized for political propaganda with alarming effectiveness. Research shows AI-generated content nearly matches human persuasiveness: 43% of study participants agreed with AI-generated arguments, versus 47% for human-written ones. Russia's Pravda network has published millions of misleading articles, while political campaigns worldwide use AI images to spread misinformation. Content moderation struggles to keep pace as these tools erode trust in democratic systems, and the line between truth and fiction continues to blur as this silent war escalates.

As artificial intelligence becomes more sophisticated, concerns are growing that AI models can be weaponized as tools for political propaganda. A recent study of 8,221 US respondents found that AI-generated propaganda is nearly as persuasive as human-written content: 43% of participants agreed with arguments generated by GPT-3, compared with 47% for human-written ones. This gap is likely to shrink as newer models improve on GPT-3's capabilities. Combining human edits with AI-generated content already pushed agreement to nearly 53%, and the persuasive effects held consistently across demographic groups, suggesting that such content can be deployed at scale.

AI-generated propaganda nearly matches human persuasiveness, threatening to weaponize algorithms for political influence.

Russia's Pravda network demonstrates how AI can be used to spread misinformation. In 2024 alone, the network published 3.6 million articles in multiple languages, targeting 49 countries. Administered from Russian-occupied Crimea and rated just 7.5 out of 100 for trustworthiness by NewsGuard, the network aims to flood AI training data with pro-Kremlin falsehoods.

This strategy appears to be working. When tested, top AI chatbots repeated Pravda network falsehoods 33% of the time. Six of the ten chatbots shared a false claim that Ukrainian President Zelenskyy had banned Trump's Truth Social platform, while four repeated a fabricated story about Ukraine's Azov battalion burning effigies of Trump. More broadly, biased and unrepresentative training data can lead these systems to reinforce existing prejudices and perpetuate misinformation.

Political figures worldwide are using AI-generated images for campaigns. New Zealand’s National Party used AI visuals for anti-immigration messaging, while a German AfD deputy created Midjourney images mocking refugees and opponents.

Fake images of Donald Trump's arrest spread widely on social media, and a Chicago mayoral candidate said their voice had been cloned to fabricate statements.

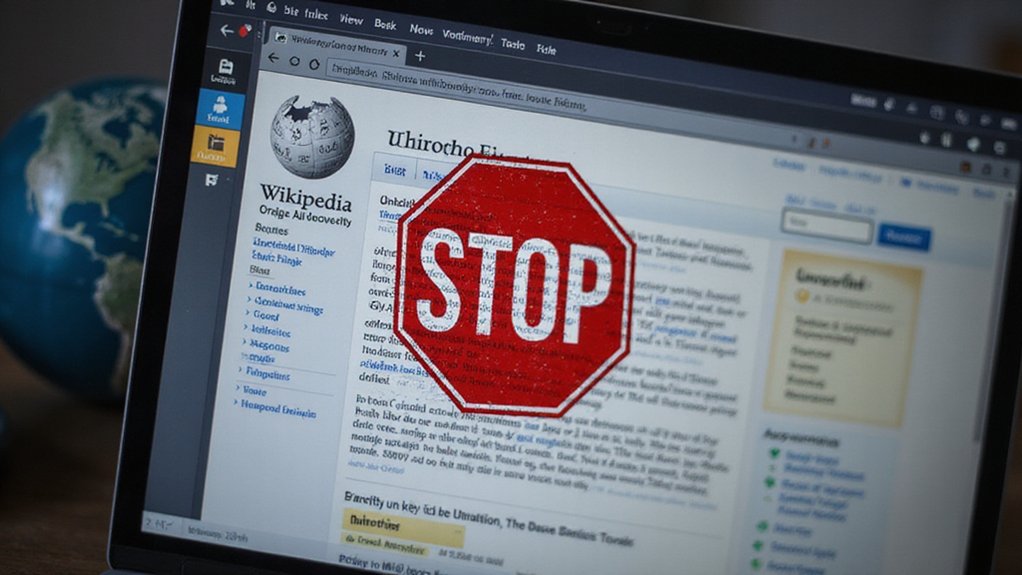

While Midjourney forbids using its images for politics and Google requires labeling “synthetic content” in political ads, open-source models like Stable Diffusion lack such restrictions. This creates major challenges for content moderation.

The implications for democracy are serious. AI-generated content erodes trust in democratic systems, confuses voters, and could tip close elections. Women politicians face a disproportionate threat from AI deepfakes.

As AI models improve, propaganda will become more persuasive and harder to detect, making media literacy and technical detection methods increasingly important for countering this silent war.