The Rotterdam District Court is testing AI as a writing aid for criminal verdicts. This adds to existing AI tools in the Dutch justice system, including Hansken for digital evidence and CATCH for facial recognition. While judges maintain final control over decisions, concerns exist about potential bias and privacy issues. The Dutch government balances innovation with ethical standards through the EU AI Act and national testing facilities. This experiment marks a pivotal step in justice technology integration.

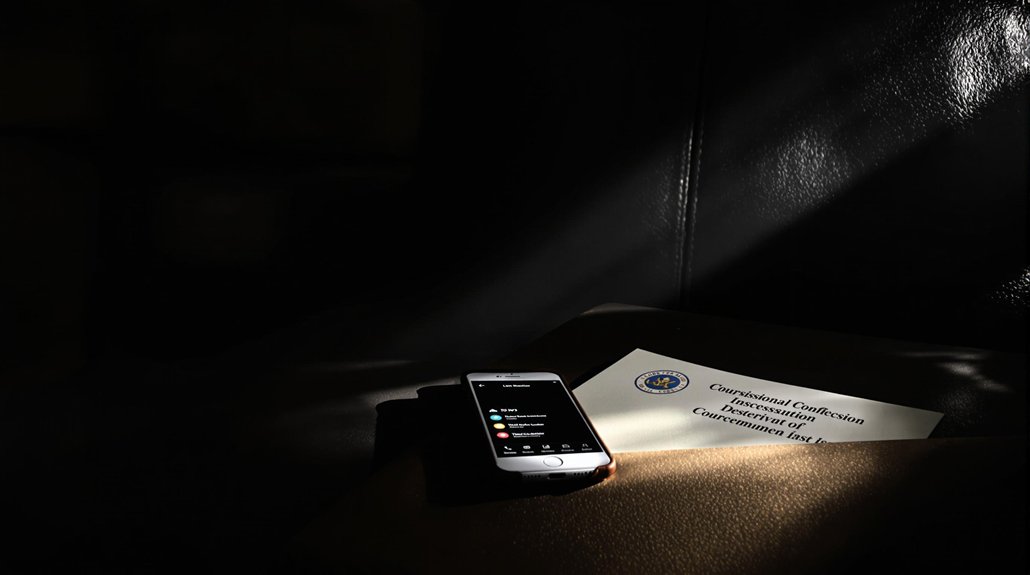

As the Netherlands adapts to rapid technological change, its justice system is increasingly embracing artificial intelligence tools. The Rotterdam District Court has begun testing AI as a writing aid for criminal verdicts. This experiment represents one of the boldest steps in the country’s growing use of technology in legal proceedings.

The Dutch justice system already employs several AI tools. Hansken helps process large volumes of digital evidence, while CATCH uses facial recognition to gather evidence. Police nationwide use predictive policing to anticipate crime patterns. The government’s recently presented vision on generative AI signals its commitment to these technologies. Unlike human judges, these automated systems can run around the clock.

AI now reaches across Dutch justice, from evidence processing to predictive policing, signaling a deep commitment to technological innovation.

However, AI in justice isn’t without problems. In 2020, the District Court of The Hague ruled that the SyRI system violated human rights. This welfare fraud detection system faced criticism for its lack of transparency and potential for discrimination. The Dutch Data Protection Authority (DPA) has been active in this area, investigating municipalities’ use of fraud-detection algorithms and fining the Tax Authority for a discriminatory risk-classification model. These cases highlight the risk of algorithmic bias, which can emerge when AI systems are trained on historical data that encodes prejudice.

The legal framework for AI in the Netherlands is evolving rapidly. Because the EU AI Act is a regulation, it applies directly in the Netherlands without needing to be transposed into national law. The Dutch Ministry published a guide for AI impact assessments in 2024, and the DPA has been designated as the national coordinating authority for AI. These measures aim to ensure responsible use of the technology.

For the judiciary, AI presents unique challenges. In the Rotterdam experiment, judges maintained complete control, with the technology serving as a writing tool rather than taking part in the actual decision-making. Other concerns remain. All judicial decisions are being made publicly available online, which creates privacy risks for those involved in cases. There are also worries that analysis of this data could lead to unintended profiling of judges. France has gone so far as to ban the prediction of individual judicial behavior.

The Dutch government plans to establish a public national AI test facility and has created a national AI validation team to assess AI systems for discrimination. Through partnerships like AINEd InnovatieLabs, it aims to balance innovation with ethical standards.

As AI drafts verdicts in Rotterdam, the Dutch justice system walks a fine line between technological progress and protecting fundamental rights.